Anyway, before moving further, I want to emphasize that assertions have a different goal from runtime error handling. Assertions are meant to catch logic errors in your code during development, before the program or executable is released or used in an operational environment. Therefore, this post doesn't cover the use of assertions. Instead, it focuses on runtime errors caused by an "invalid" state of system resources, such as a nonexistent file, a failure to allocate heap memory, etc. Preliminary guidance on when to use assertions can be found at MSDN: Errors and Exception Handling (Modern C++). The MSDN article is rather centered on the Microsoft platform, but the principles it explains apply to any C/C++ code.
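To make the distinction concrete, here is a minimal sketch. The functions Average and TryOpen are hypothetical and are not taken from the sample code.

- The assert() guards a precondition that only a bug could violate.
- A missing file is an expected runtime condition, so it is checked in every build.

Keep in mind that assert() does nothing when NDEBUG is defined. That is why it can't stand in for runtime error handling.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdio>

// Logic error check: callers must never pass an empty array.
// assert() is compiled out when NDEBUG is defined, so it only
// helps during development.
int Average(const int* values, int count)
{
    assert(values != nullptr && count > 0);
    long sum = 0;
    for (int i = 0; i < count; ++i) {
        sum += values[i];
    }
    return static_cast<int>(sum / count);
}

// Runtime error check: a missing file is an expected condition in the
// field, so it is handled in every build instead of asserted.
// Returns 0 on success, otherwise the errno value left by fopen()
// (POSIX fopen() sets errno on failure; ISO C does not require it).
int TryOpen(const char* path)
{
    std::FILE* f = std::fopen(path, "r");
    if (f == nullptr) {
        return errno;
    }
    std::fclose(f);
    return 0;
}
```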

Let's get back to the main theme: the runtime error handling interface between C++11 and C code. MSDN provides a sample solution to this problem as well: How to: Interface Between Exceptional and Non-Exceptional Code. Unfortunately, the MSDN sample doesn't use a C++11 smart pointer to manage the file HANDLE resource it uses. Moreover, it's Windows-centric. Therefore, it's not a "pure" C++11 solution yet. However, the idea presented in the MSDN sample is profound, and I adopted it in the code for my previous post about using a custom deleter.
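The C++11 piece missing from the MSDN sample is a smart pointer that owns the C resource. Below is a minimal sketch for a C FILE* rather than a Windows HANDLE. CloseFile, FilePtr and OpenFile are hypothetical names. The deleter's type is part of the unique_ptr type, and this sketch uses a plain function pointer for it. unique_ptr skips the deleter when the stored pointer is null, so a failed fopen() needs no special cleanup.

```cpp
#include <cstdio>
#include <memory>

// Deleter for a C FILE*, similar in spirit to the closeFile deleter
// used by the FileHandler code later in this post.
int CloseFile(std::FILE* f)
{
    return std::fclose(f);
}

// unique_ptr that calls CloseFile() when it is destroyed or reset.
using FilePtr = std::unique_ptr<std::FILE, int (*)(std::FILE*)>;

// Opens a file; the returned pointer is empty if fopen() failed.
FilePtr OpenFile(const char* path, const char* mode)
{
    return FilePtr(std::fopen(path, mode), CloseFile);
}
```

Calling reset() on a non-empty FilePtr closes the file at that point instead of at the end of the scope.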

The basic approach to runtime error handling differs between C and C++:

- In C, you have the errno variable from the standard C library. If your code runs on Windows, you can also query the error code via GetLastError(). Because I'm trying to be platform independent, let's focus on errno. In C, your code checks the return value of a C library function for failure and then reads errno to find out what went wrong. Side note: this C approach is akin to "side-band" signaling in a hardware protocol, because the return value of the called function doesn't give you the full picture. Instead, you need to check another variable through a different channel.

- In C++, your code should use exceptions to propagate an error "up the stack" until there is a "handler" that can handle it. If the exception is never handled, the standard behavior is to call std::terminate, which normally terminates the application.
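Here is a minimal sketch contrasting the two styles. It assumes a POSIX-like fopen() that sets errno on failure. OpenCStyle, OpenCxxStyle, LoadConfig and LoadConfigOrReport are hypothetical names.

- The C-style function reports failure through its return value and hands back errno separately.
- The C++-style function throws instead. The exception passes through LoadConfig, which has no error-handling code, until LoadConfigOrReport catches it.

```cpp
#include <cerrno>
#include <cstdio>
#include <cstring>
#include <stdexcept>
#include <string>

// C style: the return value only says that the call failed; the reason
// arrives "side-band" through errno.
bool OpenCStyle(const char* path, int* errorOut)
{
    std::FILE* f = std::fopen(path, "r");
    if (f == nullptr) {
        *errorOut = errno;  // read errno immediately after the failing call
        return false;
    }
    std::fclose(f);
    return true;
}

// C++ style: the failure becomes an exception that travels up the stack.
void OpenCxxStyle(const char* path)
{
    int err = 0;
    if (!OpenCStyle(path, &err)) {
        throw std::runtime_error(std::string("cannot open file: ") + std::strerror(err));
    }
}

// Intermediate layer: no error-handling code, the exception passes through.
void LoadConfig(const char* path)
{
    OpenCxxStyle(path);
}

// The "handler" sits higher up the call stack.
bool LoadConfigOrReport(const char* path)
{
    try {
        LoadConfig(path);
        return true;
    } catch (const std::runtime_error& e) {
        std::fprintf(stderr, "%s\n", e.what());
        return false;
    }
}
```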

Looking at the two different runtime error handling mechanisms in C and C++, you have probably already guessed the answer: wrap the C error code in a C++ exception. I provide sample code that shows how to wrap a C error code in a C++ exception at https://bitbucket.org/pinczakko/custom-c-11-deleter. It's an updated version of my C++11 custom deleter sample code. Feel free to clone it. The rest of this post explains the code at that Bitbucket URL.

The steps to wrap a C error code in a C++11 exception are:

- Create an exception class that derives from the runtime_error class.

- Store the error code (number) in that exception class.

- Create a function that the exception class uses to transform the error code into a human-readable error message (string).

- Throw an object of the exception class type in places where a runtime error might occur.

- Catch the exception in the right place in your code.
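For comparison, the C++11 standard library already provides an exception class that covers the first three steps: std::system_error.

- It derives from std::runtime_error.
- It stores a std::error_code.
- Its what() typically combines your message with the text for that code.

The sketch below is a swapped-in alternative, not the approach used in the Bitbucket sample. OpenOrThrow is a hypothetical helper. It uses std::generic_category(), which interprets the integer as an errno value.

```cpp
#include <cerrno>
#include <cstdio>
#include <string>
#include <system_error>

// Alternative to a hand-written exception class: std::system_error
// stores the error code, and std::generic_category() interprets the
// int as an errno value. The caller owns the returned FILE* and must
// fclose() it.
std::FILE* OpenOrThrow(const char* path, const char* mode)
{
    std::FILE* f = std::fopen(path, mode);
    if (f == nullptr) {
        int err = errno;  // save errno before any other library call
        throw std::system_error(err, std::generic_category(),
                                std::string("Failed to open ") + path);
    }
    return f;
}
```

A caller can then test the cause portably, for example with e.code() == std::errc::no_such_file_or_directory, instead of comparing raw errno numbers.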

The preceding steps are not difficult. Let's examine the sample code in more detail to understand them.

The exception class is FileHandlerException. It's defined as follows:

```cpp
class FileHandlerException : public runtime_error
{
public:
    explicit FileHandlerException(int errNo, const string& msg):
        runtime_error(FormatErrorMessage(errNo, msg)), mErrorNumber(errNo) {}

    int GetErrorNo() const {
        return mErrorNumber;
    }

private:
    int mErrorNumber;
};
```

The FileHandlerException class is derived from the runtime_error class, which is part of the C++ standard library (declared in the stdexcept header). FileHandlerException uses its mErrorNumber member to store the error code (the errno value) obtained from C. FormatErrorMessage() is a custom function that transforms an errno value into a human-readable string via the C strerror() function. This is the FormatErrorMessage() implementation:

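```cpp
string FormatErrorMessage(int errNo, const string& msg)
{
    static const int BUF_LEN = 1024;

    // The vector is zero-filled, so copying at most BUF_LEN - 1 bytes
    // always leaves a terminating '\0' in the last element.
    vector<char> buf(BUF_LEN);
    strncpy(buf.data(), strerror(errNo), BUF_LEN - 1);

    return string(buf.data()) + " (" + msg + ") ";
}
```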
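The fragments above rely on two things:

- a using namespace std directive;
- FormatErrorMessage() being declared before the class whose constructor calls it.

Below is a self-contained arrangement with explicit std:: qualification. It is a sketch that leaves out the DeleterTest namespace the sample code uses.

```cpp
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Declared before FileHandlerException because its constructor calls it.
std::string FormatErrorMessage(int errNo, const std::string& msg)
{
    static const int BUF_LEN = 1024;
    std::vector<char> buf(BUF_LEN);  // zero-filled, so buf stays terminated
    std::strncpy(buf.data(), std::strerror(errNo), BUF_LEN - 1);
    return std::string(buf.data()) + " (" + msg + ") ";
}

// Exception type that keeps the original errno value next to the message.
class FileHandlerException : public std::runtime_error
{
public:
    explicit FileHandlerException(int errNo, const std::string& msg)
        : std::runtime_error(FormatErrorMessage(errNo, msg)), mErrorNumber(errNo) {}

    int GetErrorNo() const { return mErrorNumber; }

private:
    int mErrorNumber;
};
```

Catching it by reference gives access to both what() and GetErrorNo(), as the catch site later in this post shows.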

As you can see, it's not difficult to implement the wrapper for a C runtime error code. Let's proceed to see how the exception class is used.

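```cpp
explicit FileHandler(const char* path, const char* mode)
try : mPath{path}, mMode{mode}
{
    cout << "FileHandler constructor" << endl;

    FILE* f = fopen(path, mode);
    if (f != NULL) {
        // Hand the FILE* to a unique_ptr that calls closeFile() on destruction.
        unique_ptr<FILE, int (*)(FILE*)> file{f, closeFile};
        mFile = std::move(file);
    } else {
        // Save errno right away; later library calls may overwrite it.
        int err = errno;
        throw FileHandlerException(err, "Failed to open " + string(path));
    }
} catch (FileHandlerException&) {
    // 'throw;' rethrows the original exception object, whereas 'throw e;'
    // would throw a copy. A constructor's function-try-block also rethrows
    // automatically when the handler ends.
    throw;
}
```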

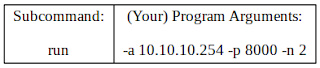

In the preceding code, if the call to fopen() fails to produce a usable FILE object, an exception object of type FileHandlerException is initialized and thrown. The catch part of the code simply rethrows the exception object higher up the stack. The code that finally catches the exception object is shown below.

```cpp
try {
    DeleterTest::FileHandler f(argv[1], "r");
    //.. irrelevant code omitted
} catch (DeleterTest::FileHandlerException& e) {
    cout << "Error!!!" << endl;
    cout << e.what() << endl;
    cout << "errno: " << e.GetErrorNo() << endl;
}
```
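To see how an exception leaves a constructor's function-try-block and reaches a handler higher up the stack, here is a reduced sketch. FileError, Handler and OpenAndClassify are hypothetical stand-ins for FileHandlerException, FileHandler and the catch site above. ENOENT is used only as a sample errno value.

Because the exception class derives from std::runtime_error, a handler can name either the exact type or the base class. Catch clauses are tried in order, so the most specific one goes first.

```cpp
#include <cerrno>
#include <stdexcept>
#include <string>

// Hypothetical, reduced stand-in for FileHandlerException.
class FileError : public std::runtime_error
{
public:
    FileError(int errNo, const std::string& msg)
        : std::runtime_error(msg), mErrorNumber(errNo) {}
    int GetErrorNo() const { return mErrorNumber; }

private:
    int mErrorNumber;
};

// Hypothetical class whose constructor uses a function-try-block,
// like the FileHandler constructor.
class Handler
{
public:
    explicit Handler(const std::string& path)
    try : mPath{path}
    {
        if (mPath.empty()) {
            throw FileError(ENOENT, "Failed to open an empty path");
        }
    } catch (const std::runtime_error&) {
        // 'throw;' rethrows the original FileError object. Writing
        // 'throw e;' against this base-class reference would throw a
        // sliced std::runtime_error copy instead.
        throw;
    }

private:
    std::string mPath;
};

// Most specific handler first; the std::runtime_error clause acts as a
// fallback for any other runtime error.
std::string OpenAndClassify(const std::string& path)
{
    try {
        Handler h(path);
        return "opened";
    } catch (const FileError& e) {
        return "file error, errno " + std::to_string(e.GetErrorNo());
    } catch (const std::runtime_error& e) {
        return std::string("runtime error: ") + e.what();
    }
}
```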

The final "handler" simply prints the error string associated with the runtime error (what caused the failure to obtain a valid FILE pointer), together with the errno value.

One final note about exception support in C++11: there is no comprehensive support for Unicode character sets yet. The standard exception classes carry their message as a narrow string (what() returns const char*), and there is no wide-character counterpart. I've searched the web for an explanation of the matter, but all the sources reach the same conclusion. Please comment below if you know a better answer or an update to the problem.

Hopefully, this post is useful for those doing mixed C and C++ code development.